Keep Your Engineering Metrics Simple

2021-03-03 - Engineering Intelligence

Every week I stumble upon articles or online conversations about engineering metrics. The content often advocates a particular set of metrics.

I believe that engineering metrics are valuable but need to be adjusted to the team's or organization's context. Otherwise, you might optimize for metrics that do not benefit your specific situation. Therefore, I want to share the thinking behind my metrics and encourage you to think about your engineering process and which metrics can support your goals.

A few years ago, I defined a simple set of metrics that I check regularly, usually every week. Those metrics are all based on our engineering philosophy: "Ship software constantly with high quality."

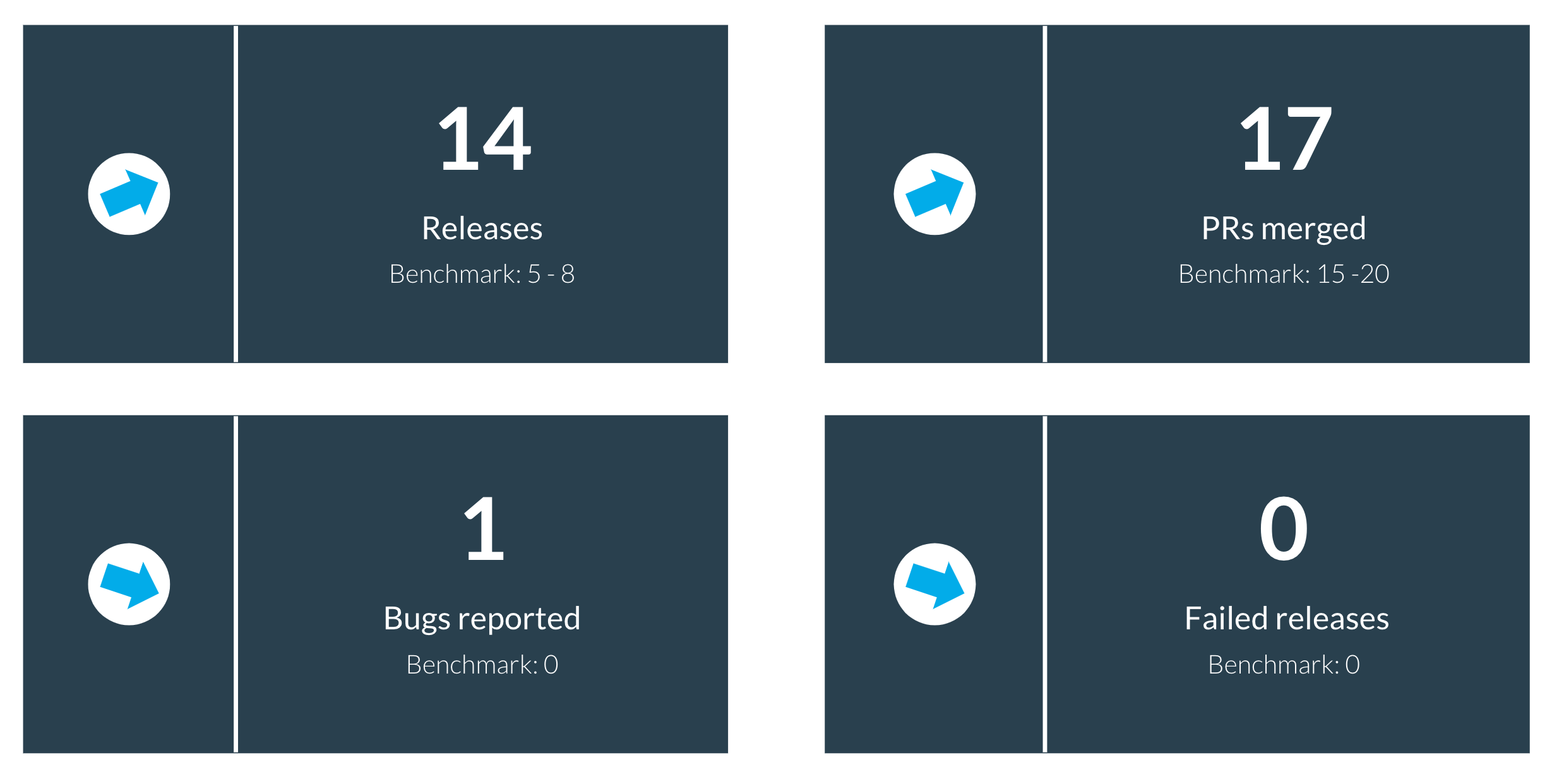

- Releases: The number of times the team releases changes to production

- PRs merged: The number of times the team merged and closed PRs

- Bugs reported: The number of reported bugs

- Failed releases: The number of releases that contain a bug, measured against the specs

You might now ask: why did you choose those metrics? That is a valid question, because there are many other metrics, Cycle Time to name one.

First of all, my metrics reflect progress (upper row) and quality (lower row). Imagine you ship many features, but 50% of them contain bugs that negatively affect your customers. The reverse is just as problematic: your team ships a feature without a single bug but takes ages to release it. The progress and quality of your team's output should be in balance.

Secondly, I focused on simplicity when I put the metrics together. I didn't want to run advanced data aggregations or rely on specific tooling. All metrics can be measured and collected manually to start with. Automation followed later, once I saw the value for the team.
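To illustrate how little tooling this needs, here is a minimal sketch of what a first automation step could look like. It is not the setup I use; the `Event` record, the event kind names, and the `weekly_counts` function are hypothetical names chosen for this example. It assumes you keep a plain log of dated events and that a failed release is logged as its own event in addition to the release itself.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import date

# Hypothetical event kinds, one per metric in this post.
METRICS = ("release", "pr_merged", "bug_reported", "failed_release")


@dataclass(frozen=True)
class Event:
    """One logged occurrence, e.g. a release or a reported bug (assumed format)."""
    day: date
    kind: str


def weekly_counts(events):
    """Count each metric per ISO week, keyed by (year, week number)."""
    per_week = {}
    for event in events:
        if event.kind not in METRICS:
            raise ValueError(f"unknown event kind: {event.kind}")
        year, week, _ = event.day.isocalendar()
        per_week.setdefault((year, week), Counter())[event.kind] += 1
    # Fill in zeros so every week reports all four metrics, oldest week first.
    return {
        week: {metric: counter[metric] for metric in METRICS}
        for week, counter in sorted(per_week.items())
    }


if __name__ == "__main__":
    log = [
        Event(date(2021, 3, 1), "release"),
        Event(date(2021, 3, 2), "pr_merged"),
        Event(date(2021, 3, 3), "pr_merged"),
        Event(date(2021, 3, 8), "bug_reported"),
    ]
    for week, counts in weekly_counts(log).items():
        print(week, counts)
```

The log itself can still be filled in by hand, for example from a spreadsheet export, which keeps the approach independent of any specific tool.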

Progress Metrics

The Releases metric is comparable to the Velocity metric that you find in many project management tools, except that I consider work done only when it is released to production. That measurement gives me a good indication of how often we release changes to our users in a specific time range, like a week.

Furthermore, I check how many PRs got merged. The team can make progress not only with releases but also by finishing the implementation of a task. For example, this covers the case where a feature consists of several parts that are implemented individually, but the release happens only after all parts are complete.

Quality Metrics

Keeping quality high is essential because otherwise we might harm the user experience. Therefore, I check the number of Bugs Reported and review the bugs themselves. We need to learn from our bugs and prevent them in the future.

The Failed Releases metric captures the worst-case scenario. The team strives for high-quality releases, and we don't want to introduce new bugs with our releases. I measure this metric separately because it is my red flag and gets my full attention.

The metrics help me be aware of the team's progress weekly and identify anomalies (good or bad). Based on the information, I can analyze the situation and take action, e.g., help clarify a more complex feature.
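Spotting anomalies does not need more than a comparison against recent weeks. The following is only a sketch under my own assumptions, not a rule I prescribe: `flag_anomalies` and the `tolerance` parameter are hypothetical, and the 50% default is an arbitrary starting point. It flags a metric when this week's value deviates from the average of previous weeks by more than the tolerance, in either direction.

```python
from statistics import mean


def flag_anomalies(history, latest, tolerance=0.5):
    """Return metrics whose latest value deviates notably from the past average.

    history:   metric name -> list of counts from previous weeks
    latest:    metric name -> count for the current week
    tolerance: allowed deviation as a fraction of the average (assumed default)
    """
    flagged = {}
    for metric, value in latest.items():
        past = history.get(metric, [])
        if not past:
            continue  # nothing to compare against yet
        baseline = mean(past)
        # With a baseline of zero, any non-zero value counts as an anomaly,
        # which suits a metric like failed releases.
        if abs(value - baseline) > tolerance * baseline:
            flagged[metric] = (value, baseline)
    return flagged


if __name__ == "__main__":
    history = {
        "release": [4, 5, 6],
        "pr_merged": [10, 10, 10],
        "failed_release": [0, 0, 0],
    }
    latest = {"release": 5, "pr_merged": 3, "failed_release": 1}
    for metric, (value, baseline) in flag_anomalies(history, latest).items():
        print(f"{metric}: {value} this week vs. {baseline} on average")
```

A flag is only a prompt to look closer. Whether a drop in merged PRs is a problem or simply one large feature in progress still has to be judged in context.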

Overall, I recommend that you don't become a slave to your engineering metrics. They will not magically solve problems for you. It is still your job to interpret them in your context and draw the right conclusions from them.